2014 Course Syllabus

Basic Course Information

Instructor: Dr. Christoph F. Eick

office hours (573 PGH): MO 4-5p and WE 1-2p (through April 30)

e-mail: ceick@uh.edu

Teaching Assistant: Wellington Cabrera

office hours (575 PGH): MO 1-2p and MO 4-5p

Wellington's COSC 6342 Website

class meets: M/W 2:30-4p

cancelled classes: none

Makeup class:

Course Materials

Required Text:

Ethem Alpaydin, Introduction to Machine Learning, MIT Press, Second Edition, 2010.

Recommended Texts: Tom Mitchell, "Machine Learning", McGraw-Hill, 1997.

Christopher M. Bishop, Pattern Recognition and Machine Learning, Springer, 2006.

Some Important Dates for the Spring 2014 Semester

We., March 5: 45 minute review of course material

Mo., March 17: Midterm Exam

January 20, March 10, March 12: No lecture

Mo., April 21 and We., April 23: Student presentations Project2

Mo., April 28: last lecture

Mo., April 28: 45 minute review of course material

Mo., May 5, 2p: Final Exam

Course Elements and their Weights

Due to the more theoretical nature of machine learning, there will be a little more emphasis on exams and on understanding the course material presented in the lecture and textbook. However, there will be 2-3 projects and 2-3 graded homeworks (typically containing 15-20 problems total), which count about 40% towards the overall grade; 3-4 of these activities will be group projects / group homeworks. In 2014 the weights of the different parts of the course are as follows:

Midterm Exam 26%

Final Exam 32%

Attendance 1%

Project1: 20%

Project2: 13%

Homeworks: 8%
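As a rough illustration of how the weights above combine, the following sketch computes a weighted overall score. It assumes that every course element is scored on a 0-100 scale, which the syllabus does not state; the function name `overall_score` and the sample scores are hypothetical.

```python
# Weights (in percent) copied from the 2014 list above.
WEIGHTS = {
    "midterm": 26,
    "final": 32,
    "attendance": 1,
    "project1": 20,
    "project2": 13,
    "homeworks": 8,
}


def overall_score(scores):
    """Return the weighted course score on a 0-100 scale.

    ASSUMPTION: `scores` maps each course element to a value between
    0 and 100; the syllabus does not say how elements are scored.
    """
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS) / 100.0


# Hypothetical student, for illustration only.
example = {
    "midterm": 80,
    "final": 85,
    "attendance": 100,
    "project1": 90,
    "project2": 88,
    "homeworks": 95,
}
print(round(overall_score(example), 2))
```

The weights sum to 100, so a student who scores the same value on every element receives that value as the overall score.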

2014 Course Projects, Reviews and Homeworks

Project1: Q-Learning for a Pickup Dropoff World (PD-World)

Homework1 (Group Homework; usually 3 students)

Homework Groups:

Group1:

Aleti,Sampath Vinayak

Barman,Arko

Bector,Shiwani

Group2:

Cao,Can

Chen,Juan

Chilluveru,Sri Lakshmi

Group3:

Dhawad,Priyanka

Ghosh,Sayan

Gopisetti,Narayana Jagdeesh

Group4:

Gopisetti,Satya Deepthi

Khalil,Nacer

Kulkarni,Amita A

Group5:

Liu,Tzu Hua

Majeti,Dinesh

Matusevich,David Sergio

Group6:

Memariani,Ali

Miao,Yang

Mirsharif,Seyyedeh Qazale

Group7:

MohammadMoradi,SeyyedHessamAldin

Mughal,Zakariyya Saeed

Nandamuri,Anil Kumar

Group8:

Nguyen,Toan Bao

Pavuluri,Bhagyasri

Ponnada,Venkata Sai Karthik

Group9:

Ravipati,Prudhvi

Rayanapati,Krishna Swathi

Sant,Prajakta Avinash

Group10:

Siebeneich,Eric J.

Uppaluri,Chaitanya

Wei,Li

Group11:

Wu,Yuhang

Xing,Yutao

Yang,Yajun

Zhang,Xiaolu

Review1 on March 5, 2014 (contains solutions to the 2013 Homework1 and a few other problems from exams and other homeworks; also briefly discusses some Solution Sketches of the 2013 Midterm Exam)

Homework2&3 (final draft; same groups as Homework1; due April 15, 11p)

Project2: Making Sense of Data---Apply Machine Learning to an Interesting Dataset/Problem (Group Project; Discussion Project2 2014, More on Project2)

Project2 Groups:

Group A:

Aleti,Sampath Vinayak

Gopisetti,Satya Deepthi

Khalil,Nacer

Kulkarni,Amita A

Group B:

Barman,Arko

Bector,Shiwani

Chilluveru,Sri Lakshmi

Nguyen,Toan Bao

Group C:

Cao,Can

Chen,Juan

Pavuluri,Bhagyasri

Siebeneich,Eric J.

Group D:

Dhawad,Priyanka

Ghosh,Sayan

Gopisetti,Narayana Jagdeesh

Group E:

Liu,Tzu Hua

Majeti,Dinesh

Matusevich,David Sergio

Group F:

Memariani,Ali

Miao,Yang

Mirsharif,Seyyedeh Qazale

MohammadMoradi,SeyyedHessamAldin

Group G:

Mughal,Zakariyya Saeed

Nandamuri,Anil Kumar

Uppaluri,Chaitanya

Wei,Li

Group H:

Ponnada,Venkata Sai Karthik

Ravipati,Prudhvi

Rayanapati,Krishna Swathi

Sant,Prajakta Avinash

Group I:

Wu,Yuhang

Xing,Yutao

Yang,Yajun

Zhang,Xiaolu

Review2 on Monday, April 28, 2014 (Exam3 in 2009, 2013 Machine Learning Final Exam, Solution Sketches 2011 Homework3)

Course Topics

Topic 1: Introduction to Machine Learning

Topic 2: Supervised Learning

Topic 3: Bayesian Decision Theory (excluding Belief Networks)

Topic 5: Parametric Model Estimation

Topic 6: Dimensionality Reduction Centering on PCA

Topic 7: Clustering1: Mixture Models, K-Means and EM

Topic 8: Non-Parametric Methods Centering on kNN and density estimation

Topic 9: Clustering2: Density-based Approaches

Topic 10: Decision Trees

Topic 11: Comparing Classifiers

Topic 12: Combining Multiple Learners

Topic 13: Support Vector Machines

Topic 14: More on Kernel Methods

Topic 15: Naive Bayes and Belief Networks

Topic 16: Neural Networks

Topic 17: Active Learning

Topic 18: Reinforcement Learning

Topic 19: Hidden Markov Models

Topic 20: Computational Learning Theory

Topics covered and order of topic coverage in 2014: Topic1, Topic18, Topic2, Topic3, Topic5, Topic6, Topic7, Topic8, Topic10, Topic12, Topic11, Topic15, Topic19, Topic13, Topic14, and Topic20.

Prerequisites

The course is mostly self-contained. However, students taking the course should have sound software development skills and some basic knowledge of statistics.

2014 Transparencies and Other Teaching Material

Course Organization ML Spring 2014

Topic 1: Introduction to Machine Learning (Eick/Alpaydin Introduction, Tom Mitchell's Introduction to ML---only slides 1-8 and 15-16 will be used)

Topic 2: Supervised Learning (examples of classification techniques: Decision Trees, k-NN)

Topic 3: Bayesian Decision Theory (excluding Belief Networks) and Naive Bayes (Eick on Naive Bayes)

Topic 4: Using Curve Fitting as an Example to Discuss Major Issues in ML (read Bishop Chapter 1 in conjunction with this material; not covered in 2011)

Topic 5: Parametric Model Estimation

Topic 6: Dimensionality Reduction Centering on PCA (PCA Tutorial, Arindam Banerjee's More Formal Discussion of the Objectives of Dimensionality Reduction)

Topic 7: Clustering1: Mixture Models, K-Means and EM (Introduction to Clustering, Modified Alpaydin transparencies, Top 10 Data Mining Algorithms paper)

Topic 8: Non-Parametric Methods Centering on kNN and Density Estimation (kNN, Non-Parametric Density Estimation, Summary Non-Parametric Density Estimation, Editing and Condensing Techniques to Enhance kNN, Toussaint's survey paper on editing, condensing and proximity graphs)

Topic 9: Clustering2: Density-based Clustering (DBSCAN paper, DENCLUE2 paper)

Topic 10: Decision Trees

Topic 11: Comparing Classifiers

Topic 12: Ensembles: Combining Multiple Learners for Better Accuracy

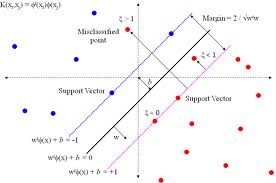

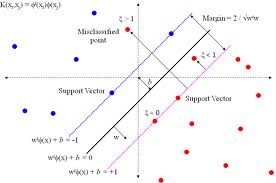

Topic 13: Support Vector Machines (Eick: Introduction to Support Vector Machines, Alpaydin on Support Vectors and the Use of Support Vector Machines for Regression, PCA, and Outlier Detection (only transparencies which carry the word "cover" will be discussed), Smola/Schoelkopf Tutorial on Support Vector Regression)

Topic 14: More on Kernel Methods (Arindam Banerjee on Kernels, Nuno Vasconcelos Kernel Lecture, Bishop on Kernels; only transparencies 13-25 and 30-35 of the excellent Vasconcelos (Homepage) slides 13-19, 22-24, 30-35 will be covered in 2013)

Topic 15: Naive Bayes and Belief Networks (Eick on Naive Bayes, Eick on Belief Networks (used in the lecture), Bishop on Belief Networks (not used in the lecture, but might be useful for preparing for the final exam))

Topic 16: Successful Application of Machine Learning

Topic 17: Active Learning (might be covered in 2014, if enough time)

Topic 18: Reinforcement Learning (Alpaydin on RL (not used), Eick on RL---try to understand those transparencies; Using Reinforcement Learning for Robot Soccer, Sutton "Ideas of RL" Video (to be shown and discussed in part in 2013), Kaelbling's RL Survey Article---read sections 1, 2, 3, 4.1, 4.2, 8.1 and 9 centering on what was discussed in the lecture)

Topic 19: Brief Introduction to Hidden Markov Models (HMM in BioInformatics)

Topic 20: Computational Learning Theory (Greiner on PAC Learning,...)

Some old Exams: 2009 Exam2 Solution Sketches, 2009 Exam3 Solution Sketches, 2014 Midterm Exam Solution Sketches.

Remark: The teaching material will be extended and possibly corrected during the course of the semester.

Grading

Each student has to have a weighted average of 74.0 or higher in the exams of the course in order to receive a grade of "B-" or better for the course.

Students will be responsible for material covered in the lectures and assigned in the readings. All homeworks and project reports are due at the date specified. No late submissions will be accepted after the due date. This policy will be strictly enforced.

Translation of numbers to letter grades:

A:100-90 A-:90-86 B+:86-82 B:82-77 B-:77-74 C+:74-70

C:70-66 C-:66-62 D+:62-58 D:58-54 D-:54-50 F:50-0
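The translation table and the exam rule stated above can be sketched in code as follows. This is only an illustration under several assumptions that the syllabus does not confirm: each boundary value belongs to the higher grade (so 90 is an A), the exam average is weighted by the 2014 exam weights (26 and 32), and a student who misses the exam threshold is capped at C+, the highest grade below B-. The names `exam_average` and `letter_grade` are hypothetical.

```python
# (lower bound, letter) pairs from the translation table, highest first.
# ASSUMPTION: a score exactly on a boundary receives the higher grade.
CUTOFFS = [
    (90, "A"), (86, "A-"), (82, "B+"), (77, "B"), (74, "B-"), (70, "C+"),
    (66, "C"), (62, "C-"), (58, "D+"), (54, "D"), (50, "D-"),
]

# ASSUMPTION: exams are weighted by their 2014 course weights.
EXAM_WEIGHTS = {"midterm": 26, "final": 32}
EXAM_THRESHOLD = 74.0  # required exam average for "B-" or better
B_MINUS_OR_BETTER = {"A", "A-", "B+", "B", "B-"}


def exam_average(midterm, final):
    """Weighted average of the two exam scores (each on a 0-100 scale)."""
    total = EXAM_WEIGHTS["midterm"] + EXAM_WEIGHTS["final"]
    weighted = EXAM_WEIGHTS["midterm"] * midterm + EXAM_WEIGHTS["final"] * final
    return weighted / total


def letter_grade(score, exam_avg):
    """Map an overall score to a letter grade, then apply the exam rule."""
    letter = "F"
    for lower, name in CUTOFFS:
        if score >= lower:
            letter = name
            break
    # ASSUMPTION: missing the exam threshold caps the grade at C+.
    if exam_avg < EXAM_THRESHOLD and letter in B_MINUS_OR_BETTER:
        return "C+"
    return letter


print(letter_grade(86.04, exam_average(80, 85)))
```

How boundary scores and the exam cap are actually handled is at the instructor's discretion; the sketch only makes one possible reading explicit.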

Only machine-written solutions to homeworks and assignments are accepted (the only exceptions are figures and complex formulas). Be aware that our only source of information is what you have turned in. If we cannot understand your solution, you will receive a low score.

Moreover, students should not throw away returned assignments or tests.

Students may discuss course material and homeworks, but must take special care to discern the difference between collaborating in order to increase understanding of course materials and collaborating on the homework / course projects. We encourage students to help each other understand course material, to clarify the meaning of homework problems, or to discuss problem-solving strategies, but it is not permissible for one student to help or be helped by another student in working through individual homework problems and in individual course projects. If, in discussing course materials and problems, students believe that their like-mindedness from such discussions could be construed as collaboration on their assignments, students must cite each other, briefly explaining the extent of their collaboration. Any assistance that is not given proper citation may be considered a violation of the Honor Code, and might result in obtaining a grade of F in the course, and in further prosecution.

2013 Course Projects and Homeworks

Project1 (Project Description Project1): Making Sense of Data---Apply Machine Learning to a Dataset (Group Project; typically 2 students per group)

Project 2: Read, Understand, Summarize and Review a Machine Learning Paper (Project2 Post-Analysis; Individual Project Part1; group project Part2; 6 paper candidates to review include: C1, C2, C3, C4, C5 and C6. Papers on Reviewing: RV1, RV2)

Project 3: Application and Evaluation of Temporal Difference Learning (Individual Project; RST-World)

Homework1 (Individual)

Homework2 (Group Homework)

Homework3 (Individual)

2011 Homeworks and Projects

Graded Homework1

Graded Homework2

Graded Homework3+4

Project1: Using Machine Learning to Make Money in Horse Race Betting (HorseRaceExample, Project1 Discussions, Preference Learning Tutorial, Wolverhampton Statistics)

Project2: Group Project---Exploring a Subfield of Machine Learning (Project2 Group Presentation Schedule).

Project3: Application and Evaluation of Temporal Difference Learning (Project Description, RST-World)

Master's Thesis and Dissertation Research in Data Mining and Machine Learning

If you plan to pursue a dissertation or Master's thesis project in the areas of data mining or machine learning, I strongly recommend taking the "Data Mining" course; moreover, I also suggest taking at least one, preferably two, of the following courses: Pattern Classification (COSC 6343), Artificial Intelligence (COSC 6368) or Machine Learning (COSC 6342). Furthermore, knowing about evolutionary computing (COSC 6367) will be helpful, particularly for designing novel data mining algorithms. Moreover, having basic knowledge of data structures, software design, and databases is important when conducting data mining projects; therefore, taking COSC 6320, COSC 6318 or COSC 6340 is also a good choice. Taking a course that teaches high performance computing is also desirable, because most data mining algorithms are very resource intensive. Because a lot of data mining projects have to deal with images, I suggest taking at least one of the many biomedical image processing courses that are offered in our curriculum. Finally, having some knowledge in the following fields is a plus: software engineering, numerical optimization techniques, statistics, and data visualization. Also be aware that having sufficient background in the areas listed above is a prerequisite for consideration for a thesis or dissertation project in the area of data mining. I will not serve as your MS thesis or dissertation advisor if you do not have basic knowledge in data mining, machine learning, statistics and related areas. Similarly, you will not be hired as an RA for a data mining project without having some background in data mining.

Machine Learning Resources

Soybean Grand Challenge: Make some money with Machine Learning!

ICML 2013 (ICML is the #1 Machine Learning Conference)

Carlos Guestrin's 2009 CMU Machine Learning Course

Andrew Ng's Stanford Machine Learning Course

Andrew Moore's Statistical Data Mining Tutorial

Christopher Bishop IET/CBS Turing Lecture

Alpaydin Textbook Webpage